Summary (TL;DR)

- CROLabs is a simple A/B testing tool that lets marketers create variants visually and track goals—no developer needed.

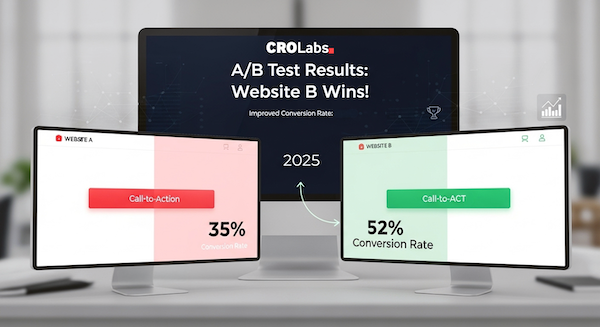

- A/B testing compares a control (A) with a variant (B) to see which version drives more of the outcome you want, such as conversions.

- Start fast: one goal → one hypothesis → one change → run the test → roll out the winner.

- For beginners, prioritize headline clarity, CTA wording/placement, offer framing, social proof, and form friction.

- Let tests run through a full business cycle, avoid mid-test edits, and watch for traffic split issues.

Why A/B Testing Matters in 2025

As a marketer, founder, or e-commerce manager, you’re surrounded by ideas: new headlines, different layouts, adjusted offers or prices. A/B testing (also called split testing) turns those ideas into evidence. Instead of debating, you run a controlled experiment, measure real behavior, and keep what works. The result: you roll out the version that drives the outcomes that matter to your business, whether that’s more sign-ups or more sales, and you rely on fewer costly assumptions.

What Is A/B Testing (or Split Testing)?

A/B testing is a controlled experiment where at least two versions of a page or element are shown to different visitors at random:

- Version A (Control): your current experience.

- Version B (Variant): the same experience with one intentional change (e.g., button copy, headline, image).

You define a goal (clicks, sign-ups, purchases), split traffic, and compare outcomes. If the variant meaningfully outperforms the control, you roll out the winner.
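To make the comparison concrete, the short Python sketch below computes each version’s conversion rate and the variant’s relative lift over the control. The function names and visitor counts are illustrative, not taken from any tool or real test:

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who completed the goal (0.04 means 4%)."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Relative change of the variant versus the control (0.20 means +20%)."""
    return (variant_rate - control_rate) / control_rate


# Illustrative counts only, not real test data.
rate_a = conversion_rate(200, 5_000)  # control (A): 4.0%
rate_b = conversion_rate(240, 5_000)  # variant (B): 4.8%
lift = relative_lift(rate_a, rate_b)
print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  lift: {lift:+.1%}")
```

A lift by itself does not tell you whether the difference is larger than random noise; that still needs a significance check before you roll anything out.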

A/B Testing for Marketers: What to Test First

For conversion rate optimization basics, start with improvements that clarify value and remove friction:

- Headline & value proposition

State who it’s for, what it does, and the primary benefit. E.g., “All-in-one invoicing for freelancers: get paid faster.”

- Primary call-to-action (CTA)

Make the action explicit: “Get your free demo,” “Start 14-day trial,” “Add to cart.” Test placement (above the fold and after key sections) and microcopy beneath the button (“No credit card required”).

- Offer framing & risk-reversal

Free shipping thresholds, a money-back guarantee, or time-boxed promos can win over hesitant visitors.

- Social proof

Star ratings, short testimonials, usage counts, or “Most popular” badges build trust, especially near CTAs.

- Form friction

Reduce fields, clarify what’s optional, show password rules inline, and offer guest checkout where relevant.

For e-commerce, also test image galleries, price anchoring, cross-sells vs. clean paths, and delivery transparency (fees, time).

Important: Change only one thing at a time! Each test checks a hypothesis, and because we want to learn from our changes, we introduce only one variable. As conversion rate optimizers, we want to know exactly which change led to which result. If a variant changes several things at once, it becomes hard, if not impossible, to tell what actually works for our audience.

How to Start Split Testing: A Simple 5-Step Framework

Step 1 — Pick one goal on one page

Choose a single, measurable outcome: homepage “Request a demo” clicks, product page “Add to cart,” or checkout completion. Clear goals focus your effort and make results easy to interpret.

Step 2 — Write a sharp hypothesis

Use this template:

Because [observation], changing [element] for [audience] will increase [metric], measured by [goal event].

Example: Because “Submit” is vague, changing the CTA to “Get your free demo” for new visitors will increase demo clicks (button click event).
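If you keep a backlog of test ideas, storing each hypothesis as separate fields rather than free text makes it easy to spot a missing piece of the template. A minimal Python sketch; the `Hypothesis` class and its field names are hypothetical and not part of CROLabs or any other tool:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    """One testable hypothesis, with one field per blank in the template."""
    observation: str
    element: str
    audience: str
    metric: str
    goal_event: str

    def sentence(self) -> str:
        # Render the fields back into the template sentence.
        return (
            f"Because {self.observation}, changing {self.element} "
            f"for {self.audience} will increase {self.metric}, "
            f"measured by {self.goal_event}."
        )


cta_test = Hypothesis(
    observation='"Submit" is vague',
    element='the CTA to "Get your free demo"',
    audience="new visitors",
    metric="demo clicks",
    goal_event="the button click event",
)
print(cta_test.sentence())
```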

Step 3 — Create one meaningful variant

Change the one thing that best supports your hypothesis. If you must adjust a cluster (e.g., CTA copy + a 1-line reassurance under it), keep the change tightly scoped so you can attribute the lift.

Step 4 — Launch the test

Your A/B tool randomly splits traffic (e.g., 50/50) and records conversions. Include all device types at first, unless you already know that desktop and mobile visitors behave very differently.
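Your testing tool handles the split for you. For the curious, one common way tools do it is hash-based bucketing: hashing a stable visitor ID together with the experiment ID, so a returning visitor always sees the same version. The sketch below assumes a first-party visitor ID (for example, from a cookie) is available; `assign_variant` and the IDs are hypothetical, and this is not a description of how CROLabs works internally:

```python
import hashlib


def assign_variant(visitor_id: str, experiment_id: str, split_a: float = 0.5) -> str:
    """Return "A" or "B" for a visitor, the same answer on every page load."""
    key = f"{experiment_id}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Map the first 8 bytes of the hash to one of 10,000 buckets (0-9999).
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    return "A" if bucket < split_a * 10_000 else "B"


# Simulate 10,000 visitors: the split should land close to 50/50.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}", "homepage-cta")] += 1
print(counts)
```

Because assignment depends only on the IDs, no per-visitor state needs to be stored, and including the experiment ID keeps the buckets of one test from lining up with those of another.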

Step 5 — Analyze and ship the winner

When the test has reached the sample size you planned and the difference between control and variant is statistically significant, deploy the winner to 100% of visitors. Archive the experiment and plan the next one. Small, steady wins compound.
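One standard way to check whether a difference is clear is a two-proportion z-test on the conversion counts. The Python sketch below uses the pooled normal approximation; the function name and counts are illustrative, and your tool may use a different method (for example, a Bayesian or sequential approach):

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for the difference between two conversion rates.

    Returns (z, p_value). Uses the pooled rate under the null hypothesis
    that A and B convert equally (normal approximation).
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal tail, via erfc.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Illustrative counts: 200/5,000 conversions for A vs. 240/5,000 for B.
z, p = two_proportion_z_test(200, 5_000, 240, 5_000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these made-up numbers (4.0% vs. 4.8%, a 20% relative lift on 5,000 visitors per version), the p-value lands just above the conventional 0.05 threshold, so the result is not yet conclusive even though the lift looks large.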

Reading Results Without a Stats Degree

A few practical pointers keep you honest:

- Run through at least one full business cycle, so the test covers both weekdays and weekends as well as your typical demand patterns.

- Avoid “peeking” and mid-test edits. Stopping early on a lucky spike or changing the variant during the test can mislead you.

- Check sample ratio mismatch (SRM). If your split isn’t close to what you assigned (e.g., not ~50/50), investigate targeting, redirects, or tag firing.

- Look beyond the primary metric. If demo clicks rise but qualified leads fall, the new messaging may be attracting the wrong audience. Iterate thoughtfully.

- Document your learnings. Keep a simple log: hypothesis, variant, audience, dates, outcome, and what you learned. This builds institutional knowledge and trust.
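A sample ratio mismatch can be checked with a chi-square goodness-of-fit test on the number of visitors each version received. Below is a minimal Python sketch for a two-version test; the 0.001 alert threshold is a common convention for SRM checks rather than a fixed rule, and the counts are illustrative:

```python
import math


def srm_p_value(visitors_a: int, visitors_b: int, expected_share_a: float = 0.5) -> float:
    """p-value of a chi-square goodness-of-fit test (1 degree of freedom)
    comparing observed visitors per version with the assigned split."""
    total = visitors_a + visitors_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((visitors_a - expected_a) ** 2 / expected_a
            + (visitors_b - expected_b) ** 2 / expected_b)
    # For 1 degree of freedom, P(chi-square > chi2) = erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


# Illustrative counts: assigned 50/50, observed 5,000 vs. 5,400 visitors.
p = srm_p_value(5_000, 5_400)
if p < 0.001:  # a common alert threshold for SRM checks
    print(f"Possible SRM (p = {p:.1e}): check targeting, redirects, and tag firing.")
else:
    print(f"Split is consistent with the assignment (p = {p:.3f}).")
```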

Common Pitfalls (and Easy Fixes)

- Testing tiny details on low-traffic pages

If a page has limited traffic, test bigger moves (layout, offer, messaging) so you can reach a decision in a reasonable timeframe.

- Running too many tests at once on overlapping audiences

Parallel tests that touch the same users can interfere with each other. Stagger them or split audiences into separate, non-overlapping buckets.

- Confusing speed with haste

Move quickly, but only ship when the result is clear and replicable. If results are inconclusive, refine the hypothesis and retest.

- Declaring victory on vanity metrics

CTR improvements are great, but keep your eye on the metrics tied to revenue (purchases, qualified demos, subscriptions).

- Declaring victory too early

Don’t call a winner on a small sample. Wait until the test has collected enough data for the result to reach statistical significance.
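Why peeking and early stopping mislead you can be shown with a small simulation. The Python sketch below runs A/A tests, where both versions share the same true conversion rate so any “winner” is a false positive, and compares checking significance once at the end with stopping at the first of ten interim checks that shows p < 0.05. All parameters (5% conversion rate, 1,000 visitors per version, 1,000 simulated tests) are illustrative assumptions:

```python
import math
import random


def p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    if pooled in (0.0, 1.0):
        return 1.0  # no variation yet, so nothing to test
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2))


def false_positive_rates(runs: int = 1_000, visitors_per_arm: int = 1_000,
                         peeks: int = 10, rate: float = 0.05,
                         seed: int = 42) -> tuple[float, float]:
    """Simulate A/A tests (no real difference between versions).

    Returns the share of tests declared significant (p < 0.05) when
    checking once at the end, and when stopping at the first of `peeks`
    evenly spaced interim checks that looks significant.
    """
    rng = random.Random(seed)
    checkpoints = {visitors_per_arm * k // peeks for k in range(1, peeks + 1)}
    final_hits = peek_hits = 0
    for _ in range(runs):
        conv_a = conv_b = 0
        significant = []  # one True/False per checkpoint
        for n in range(1, visitors_per_arm + 1):
            # Both versions convert at the same true rate.
            conv_a += rng.random() < rate
            conv_b += rng.random() < rate
            if n in checkpoints:
                significant.append(p_value(conv_a, n, conv_b, n) < 0.05)
        final_hits += significant[-1]  # last checkpoint = end of test
        peek_hits += any(significant)  # would have stopped at any significant peek
    return final_hits / runs, peek_hits / runs


final_rate, peek_rate = false_positive_rates()
print(f"Checked once at the end: {final_rate:.1%} false positives")
print(f"Stopped at first significant peek: {peek_rate:.1%} false positives")
```

Checking once at the end should flag roughly 5% of A/A tests, as designed; stopping at the first significant peek flags considerably more, even though there is nothing to find.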

How CROLabs Makes A/B Testing Simple (Truly No Developer Needed)

CROLabs is a simple A/B testing tool built for marketers, e-commerce teams, and founders who need results without engineering lift:

- Visual editor: point-and-click to change headlines, images, CTAs, or sections.

- One lightweight snippet: install once and launch experiments quickly.

- Goal tracking you can see: click the element or event you want to measure—“Add to cart,” “Start checkout,” “Request a demo.”

- Audience targeting: run tests for new vs. returning visitors, specific geos, or device types.

- Clean experiment lifecycle: start, monitor, ship the winner, and archive—keeping your site tidy and fast.

- Idea library for beginners: jump-start with vetted experiments for product pages, pricing, and checkout.

Stop guessing. Start learning what truly makes your visitors convert—with evidence.

Quick FAQ (Beginners)

How much traffic do I need?

There’s no single number: the traffic you need depends on your current conversion rate and on the smallest lift you want to detect, and more traffic reaches a decision faster. If a page has fewer than ~1,000 visits/month, focus on bigger changes that generate clearer signals, or be prepared to run the test longer until your results reach statistical significance.
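For a rough estimate of how much traffic a test needs, the standard normal-approximation formula for comparing two conversion rates can be computed directly. The Python sketch below assumes a two-sided test at a 5% significance level and 80% power, which are common defaults; testing tools may use slightly different formulas, and the function name is hypothetical:

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_mde: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed in EACH version for a two-sided two-proportion test.

    baseline: current conversion rate (e.g. 0.04 for 4%)
    relative_mde: smallest relative lift worth detecting (e.g. 0.20 for +20%)
    """
    p1 = baseline
    p2 = baseline * (1 + relative_mde)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


n = sample_size_per_variant(0.04, 0.20)
print(f"About {n:,} visitors per version")
```

For a page converting at 4% where you want to detect a 20% relative lift (4.0% → 4.8%), this comes to roughly 10,300 visitors per version. At ~1,000 visits a month, that would take well over a year, which is why low-traffic pages are better served by bigger changes with larger expected effects.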

How long should a test run?

Long enough to cover at least one full business cycle and reach a clear decision. Avoid ending early due to a short-term spike.

Should I test desktop and mobile separately?

Start together for simplicity, then review device-level results. If behavior differs, run follow-up tests per device.

Is multivariate testing better?

Not for beginners. Multivariate testing requires substantially more traffic. Master A/B tests first.
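The traffic problem with multivariate tests is simple arithmetic: a full-factorial test splits visitors across every combination of the elements being tested. A short Python illustration with made-up numbers:

```python
from math import prod


def visitors_per_combination(monthly_visitors: int, options_per_element: list[int]) -> float:
    """Monthly traffic each combination gets when all combinations are tested."""
    combinations = prod(options_per_element)
    return monthly_visitors / combinations


# Illustrative: 20,000 visits/month.
ab_cell = visitors_per_combination(20_000, [2])         # simple A/B test: 2 versions
mvt_cell = visitors_per_combination(20_000, [3, 2, 2])  # 3 headlines x 2 images x 2 CTAs
print(ab_cell, mvt_cell)
```

Going from 2 versions to 12 combinations cuts the traffic per version from 10,000 to about 1,667 visits a month, so each combination takes six times as long to collect the same sample.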

Ready to launch your first experiment? Create a free CROLabs account, set one clear goal, and publish your first A/B test today.